Research Interests📊

Before taking “Statistical Reasoning” (STA130) during my freshman year, I thought of Statistics as just another math course. Everything changed when I learned about the Poisson distribution. In astronomy, we often measure a star’s brightness by counting the photons that reach a detector. Even when the average rate is steady, individual photons arrive at random times. Because each arrival is independent of the others, the number of photons counted in a fixed exposure follows a Poisson distribution, and the statistical patterns turn out to be surprisingly simple and elegant.
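A toy simulation makes the "simple and elegant" part concrete. This is only a sketch with made-up numbers: `mean_rate` and `n_exposures` are hypothetical values chosen for illustration, not taken from any real observation. It draws photon counts for many equal-length exposures and checks the hallmark Poisson property that the variance of the counts is approximately equal to their mean.

```python
import numpy as np

# Seeded generator so the toy experiment is repeatable.
rng = np.random.default_rng(seed=0)

mean_rate = 50.0      # hypothetical average photons per exposure
n_exposures = 10_000  # hypothetical number of equal-length exposures

# Independent photon arrivals at a steady average rate give
# Poisson-distributed counts in each exposure.
counts = rng.poisson(lam=mean_rate, size=n_exposures)

sample_mean = counts.mean()
sample_var = counts.var(ddof=1)  # unbiased sample variance

print(f"mean of counts:     {sample_mean:.2f}")
print(f"variance of counts: {sample_var:.2f}")
# For a Poisson process these two numbers should be close to each other,
# which is why the noise on N counted photons is roughly sqrt(N).
```

One number, the mean rate, fixes both the expected count and its scatter. That is what makes photon-counting noise so easy to reason about.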

Throughout my undergraduate years, I completed ~2 Statistics projects per semester and explored topics including Bayesian inference, time series analysis, regression, and machine learning.

I was also fortunate to have the opportunity to take a graduate Astrostatistics course with Prof. Joshua Speagle—the developer of the “dynesty” dynamic nested sampling package—which greatly broadened my perspective on data analysis in astronomy.

Currently, I’m most excited about Bayesian methods, MCMC techniques, and machine learning algorithms. Below, you can check out some highlights from my Statistics projects. The latest update covers Bayesian Inference.

(When I find the time, I’ll add reproducible code and models on GitHub😉.)

Bayesian Inference

Relevant project:

- Correcting time-series systematics in HST STIS transmission spectrum with jitter detrending (Astro)

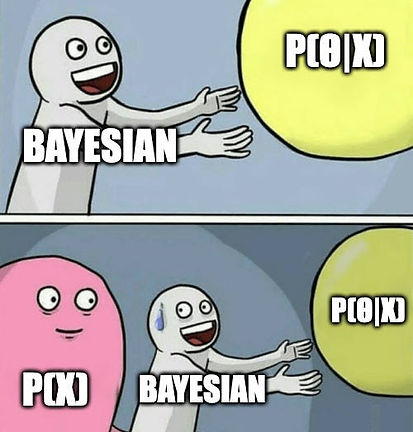

I remember when I first encountered Bayesian statistics: there seemed to be an endless list of concepts—Bayes’ Theorem, degrees of belief, priors, posteriors, updating. It all felt a bit overwhelming at first. But over time, one guiding principle helped it click:

In Bayesian statistics, model parameters are random variables.

This idea might seem straightforward, but it’s a significant shift in perspective. In many traditional (frequentist) statistical frameworks, parameters are fixed and unknown constants. In the Bayesian world, parameters are treated as uncertain quantities, each with its own probability distribution.
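To illustrate what "a parameter with its own probability distribution" looks like in practice, here is a minimal sketch built on the photon-counting picture. Everything in it is an assumption made for illustration: the helper `gamma_poisson_update`, the prior values `alpha0` and `beta0`, and the simulated `true_rate` are all hypothetical, and the Gamma prior is chosen only because it is the standard conjugate prior for a Poisson rate. The unknown rate gets a Gamma(alpha, beta) prior, and observing Poisson counts turns it into a Gamma(alpha + sum of counts, beta + number of exposures) posterior.

```python
import numpy as np


def gamma_poisson_update(alpha, beta, counts):
    """Return the Gamma posterior (shape, rate) for a Poisson rate.

    Prior: rate ~ Gamma(shape=alpha, rate=beta).
    Data:  independent Poisson counts, one per unit-length exposure.
    """
    counts = np.asarray(counts)
    return alpha + counts.sum(), beta + counts.size


rng = np.random.default_rng(seed=1)

true_rate = 50.0  # hypothetical "true" photon rate, used only to simulate data
counts = rng.poisson(lam=true_rate, size=20)

# Hypothetical broad prior on the rate (prior mean = alpha0 / beta0 = 20).
alpha0, beta0 = 2.0, 0.1

alpha_n, beta_n = gamma_poisson_update(alpha0, beta0, counts)

# The posterior is a full distribution, so we can summarize it however we like.
post_mean = alpha_n / beta_n
draws = rng.gamma(shape=alpha_n, scale=1.0 / beta_n, size=50_000)
lo, hi = np.percentile(draws, [2.5, 97.5])

print(f"posterior mean rate:   {post_mean:.2f}")
print(f"95% credible interval: [{lo:.2f}, {hi:.2f}]")
```

The output is not a single best-fit number but a distribution over the rate. Feeding in more exposures simply repeats the same update, with the current posterior serving as the next prior.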

Bayesian in Astronomy

In my own exoplanet research, Bayesian methods are invaluable. For example, when estimating atmospheric properties from noisy spectral data, the Bayesian framework naturally accommodates uncertainties and lets me incorporate prior knowledge, such as bounds on atmospheric composition or assumptions from planet formation models. The result is a more nuanced and flexible interpretation of what the data can tell us about these distant worlds.

Beyond the formula

To me, Bayesian statistics is more than a formula; it’s a mindset. It encourages me to think about parameters as uncertain quantities, to state my assumptions explicitly, and to keep updating my understanding as new data arrive.

While other mindsets—frequentist statistics, causal inference, machine learning—have their own value, Bayesian thinking complements them by emphasizing uncertainty, beliefs, and dynamic learning.